Python Data Science Online Test

For jobseekers

Practice your skills and earn a certificate of achievement when you score in the top 25%.

For companies

Screen real Python Data Science skills, flag human or AI assistance, and interview the right people.

About the test

The Python Data Science online test assesses knowledge of using Python and data science libraries such as Pandas, NumPy, SciPy, and Scikit-learn to analyze data through a series of live coding questions. The test also requires applying probability and statistics to solve data science problems.

The assessment includes work-sample tasks such as:

- Classification of data using different algorithms.

- Aggregating, grouping, sorting, and cleaning data.

- Building machine learning models.
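As a rough illustration of the aggregating, grouping, sorting, and cleaning tasks listed above, here is a minimal pandas sketch. The table, its `region` and `units` columns, and the cleaning rules (drop rows without a region, treat missing unit counts as 0) are hypothetical choices made for the example, not taken from any test question.

```python
import pandas as pd

# Hypothetical toy sales records; column names are illustrative only.
sales = pd.DataFrame({
    "region": ["North", "South", "North", "South", "East", None],
    "units": [120, 80, None, 95, 60, 40],
})

# Cleaning: drop rows with no region, then treat missing unit counts as 0.
clean = sales.dropna(subset=["region"]).fillna({"units": 0})

# Grouping and aggregation: total and mean units per region.
summary = clean.groupby("region")["units"].agg(["sum", "mean"])

# Sorting: regions ordered by total units, largest first.
summary = summary.sort_values("sum", ascending=False)
print(summary)
```

Whether a missing value should be dropped or filled depends on what it means in the data; the sketch shows one of each only to demonstrate both calls.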

A good data scientist or data analyst working in Python should be able to use the functionality of Python data science libraries to extract knowledge and insights from data.

Sample public questions

Implement the desired_marketing_expenditure function, which returns the amount of money that must be invested in a new marketing campaign to sell the desired number of units.

Use the data from previous marketing campaigns to model how the number of units sold grows linearly as the amount of money invested increases.

For example, for the desired number of 60,000 units sold and previous campaign data from the table below, the function should return the float 250,000.

Previous campaigns

| Campaign | Marketing expenditure | Units sold |
|---|---|---|
| #1 | 300,000 | 60,000 |
| #2 | 200,000 | 50,000 |
| #3 | 400,000 | 90,000 |
| #4 | 300,000 | 80,000 |
| #5 | 100,000 | 30,000 |
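One way to approach this question is ordinary least-squares linear regression of units sold on marketing expenditure, followed by solving the fitted line for the desired number of units. For the table above the fit is units = 0.2 × expenditure + 10,000, so 60,000 units requires (60,000 − 10,000) / 0.2 = 250,000, which matches the expected answer. The sketch below assumes the function receives the previous expenditures, the previous units sold, and the desired units as three parameters; that parameter list and its names are hypothetical, since the question does not state the signature.

```python
import numpy as np

def desired_marketing_expenditure(marketing_expenditure, units_sold, desired_units_sold):
    # Hypothetical signature: two sequences of past campaign data plus the target.
    # Least-squares fit of units_sold = slope * expenditure + intercept.
    # np.polyfit returns coefficients highest degree first: [slope, intercept].
    slope, intercept = np.polyfit(marketing_expenditure, units_sold, 1)
    if slope == 0:
        raise ValueError("Units sold do not vary with expenditure; cannot invert.")
    # Invert the fitted line to get the expenditure that yields the target units.
    return float((desired_units_sold - intercept) / slope)

expenditure = [300_000, 200_000, 400_000, 300_000, 100_000]
units = [60_000, 50_000, 90_000, 80_000, 30_000]
print(desired_marketing_expenditure(expenditure, units, 60_000))  # approximately 250000.0
```

The direction of the fit matters: the question describes units sold as a function of money invested, so the sketch regresses units on expenditure. Regressing expenditure on units instead and evaluating at 60,000 gives a slightly different value (roughly 250,900), because the two least-squares lines are not inverses of each other.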

As part of an application for iris enthusiasts, implement the train_and_predict function, which should classify three species of iris based on four features.

The train_and_predict function accepts three parameters:

- train_input_features - a two-dimensional NumPy array where each row contains the sepal length, sepal width, petal length, and petal width.

- train_outputs - a one-dimensional NumPy array where each element is a number representing the species of the iris described in the same row of train_input_features: 0 represents Iris setosa, 1 represents Iris versicolor, and 2 represents Iris virginica.

- prediction_features - a two-dimensional NumPy array where each row contains the sepal length, sepal width, petal length, and petal width.

The function should train a classifier using train_input_features as the input data and train_outputs as the expected results. It should then use the trained classifier to predict labels for prediction_features and return them as an iterable (such as a list or a numpy.ndarray). The nth element of the result should be the classification of the nth row of prediction_features.
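Since K-Nearest Neighbors appears among the tested skills, one reasonable classifier here is k-nearest neighbors. The sketch below implements it directly in NumPy so each step is visible; scikit-learn's `KNeighborsClassifier` (with `fit` and `predict`) provides the same technique. The choice of k = 3, Euclidean distance, and the tie-breaking rule are assumptions, and the nine training rows are made-up toy values, not the real iris dataset.

```python
import numpy as np

def train_and_predict(train_input_features, train_outputs, prediction_features, k=3):
    """k-nearest-neighbors sketch: 'training' simply stores the labeled rows."""
    X = np.asarray(train_input_features, dtype=float)
    y = np.asarray(train_outputs, dtype=int)
    queries = np.asarray(prediction_features, dtype=float)
    k = min(k, len(X))  # cannot use more neighbors than there are training rows

    # Euclidean distance from every query row to every training row,
    # shape (n_queries, n_train), computed via broadcasting.
    distances = np.linalg.norm(queries[:, None, :] - X[None, :, :], axis=2)

    # Indices of the k closest training rows for each query row.
    nearest = np.argsort(distances, axis=1)[:, :k]

    # Majority vote over the neighbors' labels; on a tie, argmax picks the smallest label.
    return np.array([np.bincount(labels).argmax() for labels in y[nearest]])

# Made-up toy rows: sepal length, sepal width, petal length, petal width.
train_X = np.array([
    [5.0, 3.5, 1.4, 0.2], [4.9, 3.0, 1.4, 0.2], [5.2, 3.4, 1.5, 0.2],  # label 0
    [6.0, 2.8, 4.3, 1.3], [5.8, 2.7, 4.1, 1.0], [6.2, 2.9, 4.4, 1.4],  # label 1
    [6.9, 3.1, 5.8, 2.2], [7.2, 3.0, 6.0, 2.1], [6.7, 3.2, 5.7, 2.3],  # label 2
])
train_y = np.array([0, 0, 0, 1, 1, 1, 2, 2, 2])
queries = np.array([[5.1, 3.3, 1.4, 0.2], [6.1, 2.8, 4.2, 1.2], [7.0, 3.1, 5.9, 2.2]])
print(train_and_predict(train_X, train_y, queries))  # [0 1 2]
```

Because the four features share the same unit (centimeters), the sketch uses raw Euclidean distance without scaling; features on very different scales would usually be standardized first.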

For jobseekers: get certified

Earn a free certificate by scoring in the top 25% on the Python Data Science test with public questions.

Take a Certification TestSample silver certificate

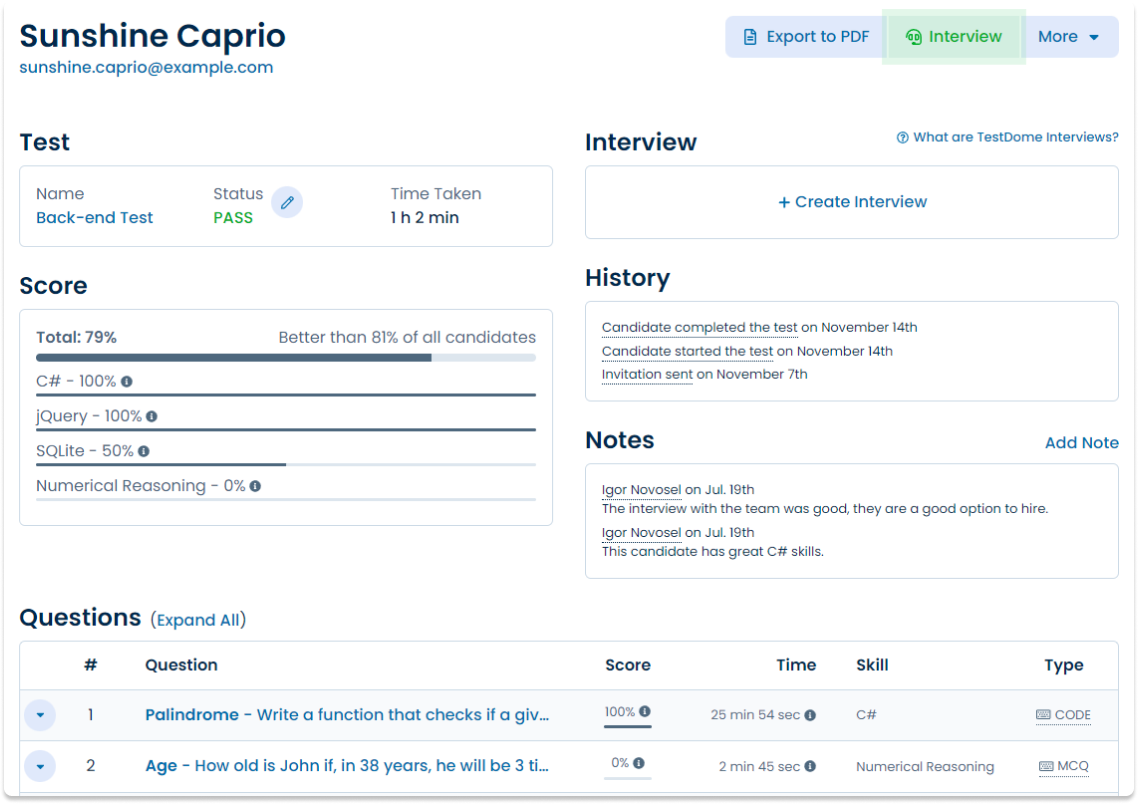

Sunshine Caprio

Java and SQL TestDomeCertificate

For companies: premium questions

Buy TestDome to access premium questions that can't be practiced.

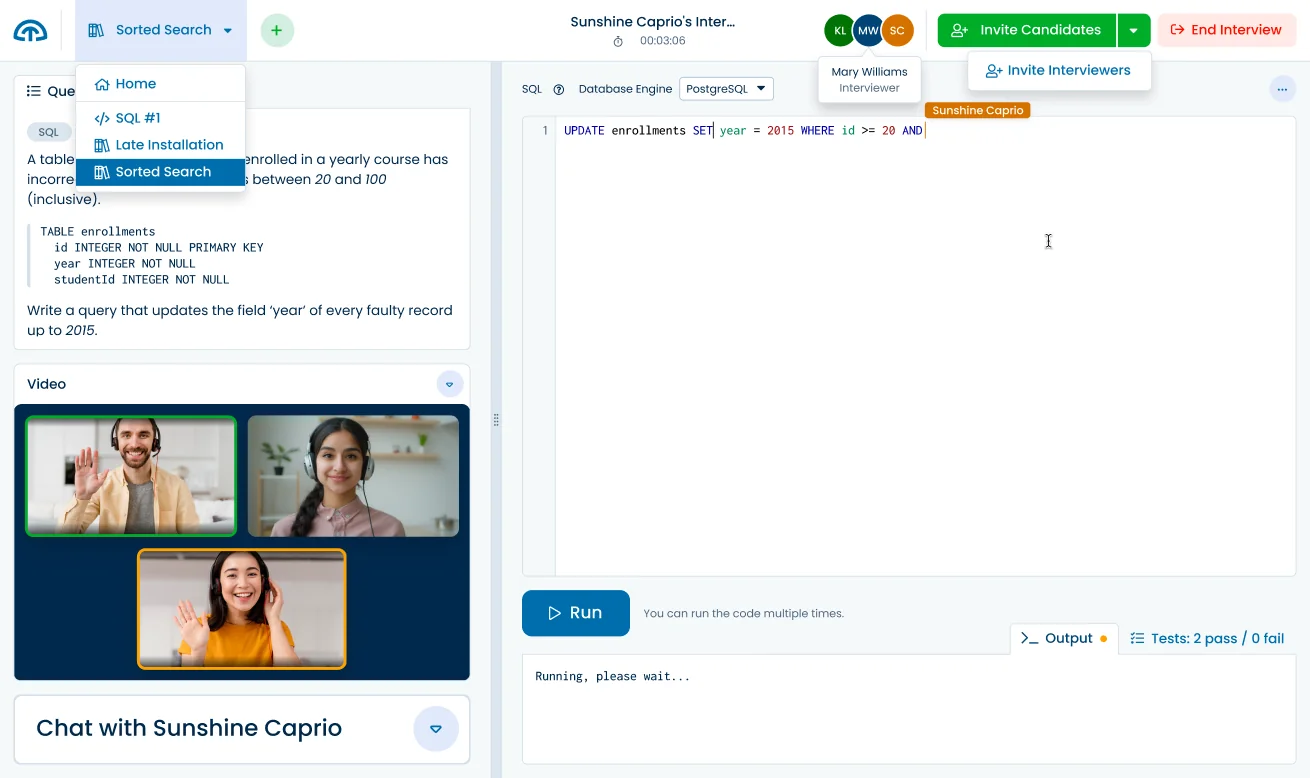

Ready to interview?

Use these and other questions from our library with our Code Interview Platform.

8 more premium Python Data Science questions

Class Grades, Cubic Approximation, Birthday Cards, Free Throws, Distribution Fitting, Clean CSV, Median Height, Credit Score.

Skills and topics tested

- Python for Data Science

- Grouping

- NumPy

- Pandas

- Machine Learning

- Nonlinear Regression

- Scikit-Learn

- Sorting

- Data Aggregation

- Cauchy Distribution

- Exponential Distribution

- Normal Distribution

- SciPy

- Data Cleaning

- Processing CSV

- Classification

- K-Nearest Neighbors

For job roles

- Data Analyst

- Data Scientist

- Statistician


Need it fast? AI-crafted tests for your job role

TestDome generates custom tests tailored to the specific skills you need for your job role.

Sign up now to try it out and see how AI can streamline your hiring process!

What others say

Simple, straightforward technical testing

TestDome is simple, provides a reasonable (though not extensive) battery of tests to choose from, and doesn't take the candidate an inordinate amount of time. It also simulates working pressure with the time limits.

Jan Opperman, Grindrod Bank


Solve all your skill testing needs

150+ Pre-made tests

130+ skills

AI-ready assessments

How TestDome works

- Choose a pre-made test or create a custom test.

- Invite candidates via email, URL, or your ATS.

- Candidates take a test remotely.

- Sort candidates and get individual reports.